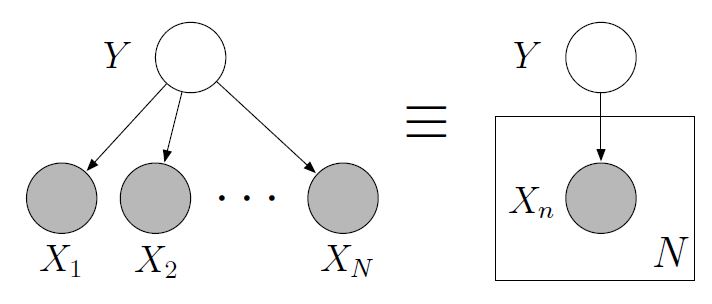

Graphical Models

- Nodes are random variables

- Edges denote possible dependence

- Observed variables are shaded

- Plates denote replicated structure

- Structure of the graph defines the pattern of conditional dependence between the ensemble of random variables

- E.g., this graph corresponds to

$$p(y, x_{1}, ..., x_{N}) = p(y)\prod^{N}_{n=1}p(x_{n}|y)$$
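The factorization above can be checked numerically. The sketch below assumes a binary $y$ and binary $x_n$ with made-up, hypothetical probabilities. It evaluates $\log p(y, x_1, \ldots, x_N)$ as $\log p(y) + \sum_n \log p(x_n \mid y)$. It also draws samples in graph order, first $y$ and then each $x_n$ given $y$.

```python
import math
import random

# Hypothetical parameters for illustration only: a binary y and N binary
# observations x_1..x_N that are conditionally independent given y.
P_Y = {0: 0.6, 1: 0.4}            # p(y)
P_X1_GIVEN_Y = {0: 0.2, 1: 0.9}   # p(x_n = 1 | y), shared by every n (the plate)


def log_joint(y, xs):
    """log p(y, x_1..x_N) = log p(y) + sum_n log p(x_n | y)."""
    total = math.log(P_Y[y])
    p1 = P_X1_GIVEN_Y[y]
    for x in xs:
        total += math.log(p1 if x == 1 else 1.0 - p1)
    return total


def sample(n, rng):
    """Ancestral sampling: draw y first, then each x_n given y."""
    y = 1 if rng.random() < P_Y[1] else 0
    xs = [1 if rng.random() < P_X1_GIVEN_Y[y] else 0 for _ in range(n)]
    return y, xs


rng = random.Random(0)
y, xs = sample(5, rng)
print(y, xs, math.exp(log_joint(y, xs)))
```

Summing `math.exp(log_joint(...))` over every configuration of $y$ and $x_{1:N}$ gives 1. This confirms the product of local conditionals is a valid joint distribution.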

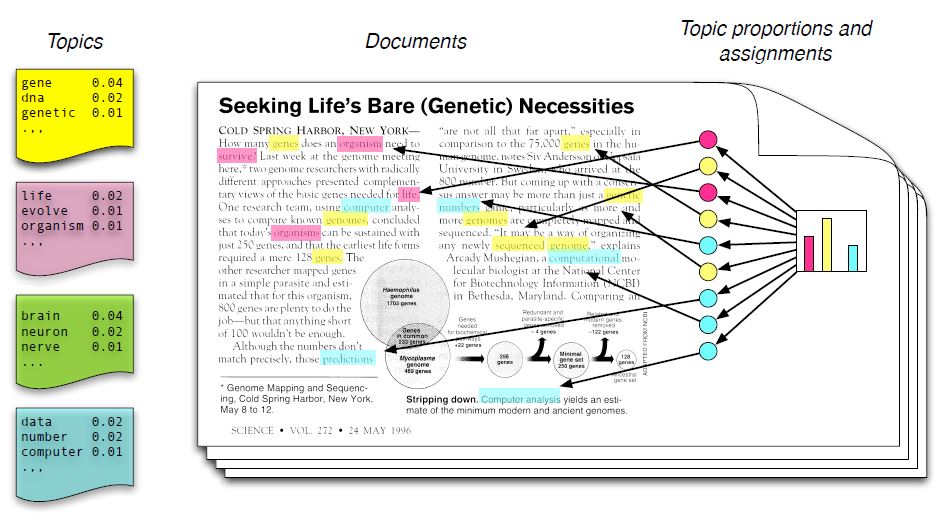

Generative Model

- Each document is a random mixture of corpus-wide topics

- Each word is drawn from one of those topics
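The two bullets above describe a sampling procedure. Here is a minimal sketch of it, assuming symmetric Dirichlet priors. The concentration values `alpha` (for document proportions) and `eta` (for topics) are hypothetical, as are all sizes. Dirichlet draws are built by normalizing independent Gamma draws, and each word's topic comes from a categorical draw.

```python
import random


def sample_dirichlet(alphas, rng):
    """Draw from Dir(alphas) by normalizing independent Gamma(a_i, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [d / total for d in draws]


def generate_corpus(num_docs, doc_len, num_topics, vocab_size, alpha, eta, seed=0):
    """Sample a toy corpus from the LDA generative process (hypothetical settings)."""
    rng = random.Random(seed)
    # Corpus-wide topics: each topic is a distribution over the vocabulary.
    topics = [sample_dirichlet([eta] * vocab_size, rng) for _ in range(num_topics)]
    docs = []
    for _ in range(num_docs):
        # Each document gets its own random mixture of the shared topics.
        theta = sample_dirichlet([alpha] * num_topics, rng)
        words = []
        for _ in range(doc_len):
            z = rng.choices(range(num_topics), weights=theta)[0]      # pick a topic
            w = rng.choices(range(vocab_size), weights=topics[z])[0]  # pick a word from it
            words.append(w)
        docs.append(words)
    return topics, docs


topics, docs = generate_corpus(num_docs=3, doc_len=8, num_topics=2,
                               vocab_size=5, alpha=0.5, eta=0.1)
print(docs)
```

The topics are drawn once for the whole corpus. The mixture weights `theta` are redrawn for every document, so documents share topics but mix them in different proportions.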

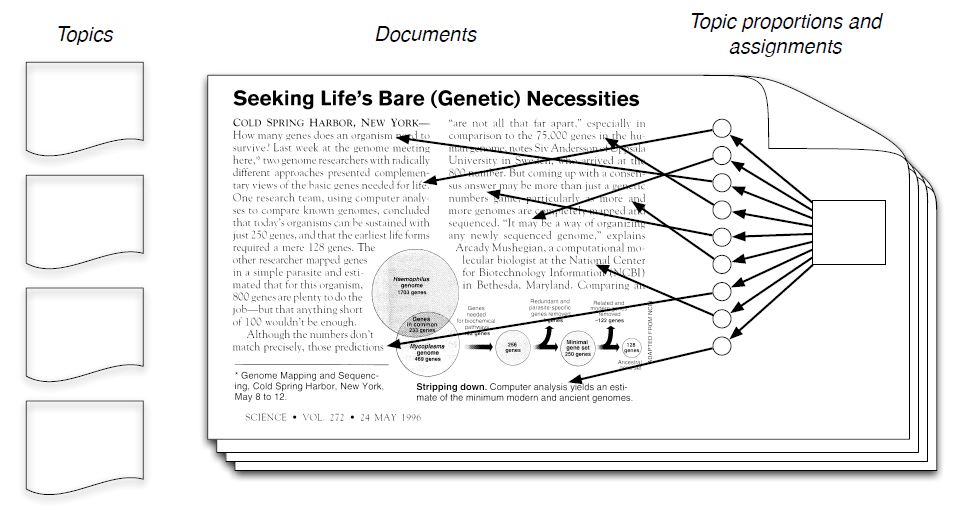

The Posterior Distribution

- In reality, we only observe the documents

- With LDA, the task is to infer the underlying (hidden) topic structure from those documents alone
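The slides do not say how this posterior is computed, and the exact LDA posterior is intractable in general. The sketch below therefore swaps in one common approximate method, collapsed Gibbs sampling; it is not taken from the slides. The topics and per-document proportions are integrated out, and each word's topic assignment is resampled in turn from its conditional given all the others. All sizes, hyperparameters, and the toy corpus are hypothetical.

```python
import random


def collapsed_gibbs_lda(docs, num_topics, vocab_size, alpha, eta, iters, seed=0):
    """Approximate the LDA posterior over topic assignments by collapsed Gibbs sampling.

    docs: list of documents, each a list of word ids in range(vocab_size).
    Returns the final assignments z and the doc-topic and topic-word count tables.
    """
    rng = random.Random(seed)
    n_dk = [[0] * num_topics for _ in docs]               # topic counts per document
    n_kw = [[0] * vocab_size for _ in range(num_topics)]  # word counts per topic
    n_k = [0] * num_topics                                # total words per topic
    z = []
    # Random initial topic assignment for every word.
    for d, doc in enumerate(docs):
        z_d = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_d.append(k)
            n_dk[d][k] += 1
            n_kw[k][w] += 1
            n_k[k] += 1
        z.append(z_d)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]
                # Remove the current word from the counts.
                n_dk[d][k] -= 1
                n_kw[k][w] -= 1
                n_k[k] -= 1
                # p(z_i = j | other assignments, words), up to a normalizing constant.
                weights = [
                    (n_dk[d][j] + alpha) * (n_kw[j][w] + eta) / (n_k[j] + vocab_size * eta)
                    for j in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                # Add the word back under its newly sampled topic.
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][w] += 1
                n_k[k] += 1
    return z, n_dk, n_kw


def estimate_topics(n_kw, eta):
    """Posterior-mean estimate of each topic's word distribution from the counts."""
    vocab_size = len(n_kw[0])
    return [[(c + eta) / (sum(row) + vocab_size * eta) for c in row] for row in n_kw]


docs = [[0, 0, 1, 1], [3, 4, 4, 3], [0, 1, 0, 1], [4, 3, 3, 4]]
z, n_dk, n_kw = collapsed_gibbs_lda(docs, num_topics=2, vocab_size=5,
                                    alpha=0.1, eta=0.01, iters=200)
print(estimate_topics(n_kw, eta=0.01))
```

Only the observed word ids enter the sampler. The topic structure is read off the counts it accumulates, which is the sense in which the hidden structure is "inferred".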

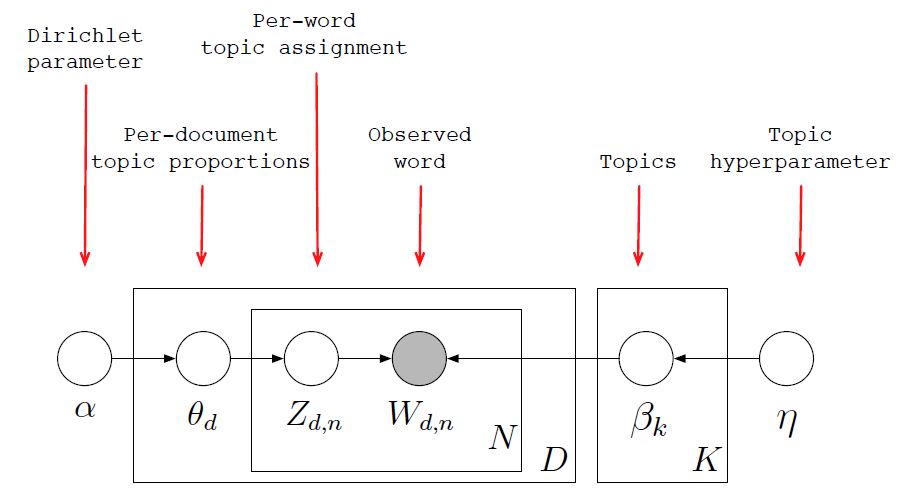

Latent Dirichlet allocation

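$$p(\beta_{1:K}, \theta_{1:D}, z_{1:D}, w_{1:D}) = \prod^{K}_{k=1}p(\beta_{k}|\eta)\prod^{D}_{d=1}p(\theta_{d}|\alpha)\left(\prod^{N}_{n=1}p(z_{d,n}|\theta_{d})\,p(w_{d,n}|\beta_{1:K}, z_{d,n})\right)$$

where $\beta_{k} \sim \mathrm{Dir}(\eta)$, $\theta_{d} \sim \mathrm{Dir}(\alpha)$, $z_{d,n} \sim \mathrm{Mult}(\theta_{d})$, $w_{d,n} \sim \mathrm{Mult}(\beta_{z_{d,n}})$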
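The sketch below evaluates the LDA log joint under the standard assumptions $\beta_k \sim \mathrm{Dir}(\eta)$, $\theta_d \sim \mathrm{Dir}(\alpha)$, $z_{d,n} \sim \mathrm{Mult}(\theta_d)$, $w_{d,n} \sim \mathrm{Mult}(\beta_{z_{d,n}})$. It assumes symmetric priors with scalar `alpha` and `eta`. It also assumes single-draw multinomials, i.e. categorical distributions, and strictly positive probability vectors, since `math.log(0)` raises. The function names are hypothetical.

```python
import math


def dirichlet_logpdf(x, alphas):
    """log Dir(x | alphas) for a point x with strictly positive entries on the simplex."""
    return (math.lgamma(sum(alphas))
            - sum(math.lgamma(a) for a in alphas)
            + sum((a - 1.0) * math.log(xi) for a, xi in zip(alphas, x)))


def lda_log_joint(betas, thetas, z, w, alpha, eta):
    """log p(beta_{1:K}, theta_{1:D}, z, w) with symmetric Dir(alpha), Dir(eta) priors.

    betas:  K topics, each a distribution over the vocabulary.
    thetas: D per-document topic proportions.
    z, w:   per-document lists of topic assignments and word ids.
    """
    num_topics, vocab_size = len(betas), len(betas[0])
    # Prior over the corpus-wide topics: sum_k log p(beta_k | eta).
    total = sum(dirichlet_logpdf(b, [eta] * vocab_size) for b in betas)
    for theta, z_d, w_d in zip(thetas, z, w):
        total += dirichlet_logpdf(theta, [alpha] * num_topics)  # log p(theta_d | alpha)
        for k, v in zip(z_d, w_d):
            total += math.log(theta[k])     # log p(z_{d,n} | theta_d)
            total += math.log(betas[k][v])  # log p(w_{d,n} | beta_{1:K}, z_{d,n})
    return total
```

For example, one topic $\beta_1 = (0.5, 0.5)$, one document with $\theta_1 = (1)$, $\alpha = \eta = 1$, and two words both assigned to topic 1 gives $2\log 0.5$. The flat Dirichlet priors contribute zero in this case.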